Introduction

Google is challenging Meta with Gemma, its newly launched family of open models. But what exactly is Gemma, and how will it help developers? To find out, imagine a world where artificial intelligence (AI) models are as ubiquitous as the air we breathe. A world where cutting-edge language models empower developers, researchers, and enthusiasts to create innovative applications, solve complex problems, and push the boundaries of what’s possible. Well, that world is closer than you think.

In a bold move, Google has unleashed its open-model arsenal, aiming to rival Meta’s (formerly Facebook) mighty Llama 2. Buckle up, tech aficionados, because the battle of the AI titans is about to get even more exciting.

The Rise of Open Source AI Models

Defining the Landscape

Before we dive into the specifics, let’s set the stage. What exactly are open-source AI models? These are pre-trained neural networks made available to the public, allowing anyone to fine-tune, adapt, and utilize them for various tasks. Think of them as the Swiss Army knives of the AI world—versatile, powerful, and ready for action.


A Brief History

The open-source movement has been gaining momentum for decades. From Linux to TensorFlow, developers worldwide have embraced collaborative development, sharing code, and fostering innovation. Google, being a torchbearer in this arena, has consistently contributed to the open-source ecosystem. But with the recent unveiling of Gemma, they’ve taken it up a notch.

Significance and Impact

Why does this matter? Well, open-source AI models democratize access to state-of-the-art technology. They level the playing field, allowing startups, researchers, and hobbyists to harness the same firepower as tech giants. Imagine a budding developer in a garage, armed with Gemma, taking on a tech giant. It’s David versus Goliath, but with neural networks.

Riding the Google Wave

The Latest Trends

Google’s commitment to AI research is unwavering. Their recent updates have sent ripples through the tech community. From BERT to T5, they’ve consistently pushed the envelope. Now, with Gemma, they’re doubling down on natural language understanding and transfer learning, building on the research and technology behind their Gemini models to ship lightweight 2B- and 7B-parameter models. The buzzword? Decoder-only Transformers. Brace yourselves.

Tech News Buzz

The blogosphere is abuzz with Gemma-related news. Developers are dissecting its architecture, experimenting with fine-tuning, and creating novel applications. Google’s move is strategic—positioning Gemma as a Meta challenger. But what sets it apart? Let’s explore.

Unveiling Gemma: A Closer Look

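1. Lightweight Magic

The Gemma models launched in February 2024 are text-only: text goes in, text comes out. Their draw is size. The 2B and 7B variants are small enough to run on a developer laptop or desktop, yet they share research and technology with Google’s much larger Gemini models. Imagine building a chatbot that runs entirely on your own machine. Gemma’s got your back.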

2. Transfer Learning Galore

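Meta’s models are impressive, and Gemma is built for the same kind of transfer learning. It ships in both pre-trained and instruction-tuned variants, and was pre-trained on trillions of tokens of primarily English web documents, code, and mathematics. It’s a knowledge sponge waiting for your fine-tuning magic.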

3. Privacy-Preserving Prowess

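Google says it used automated techniques to filter certain personal information and other sensitive data out of Gemma’s training sets. And because the weights can run on your own hardware, user data does not have to leave your machine. Developers, take note.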

4. Multilingual Mojo (With Caveats)

Here, temper expectations. Gemma was trained primarily on English data, so for other languages, from Hindi to Swahili, plan to evaluate carefully and fine-tune on in-language data.

5. Community Collaboration

Google knows the power of community. Gemma’s weights are available on Kaggle and Hugging Face, with support for JAX, PyTorch, and TensorFlow (via Keras 3.0). Join the party, contribute, and shape the future.

Gemma vs. Meta: The Showdown

| Aspect | Gemma | Meta (Llama 2) |
| --- | --- | --- |
| Model Architecture | Decoder-only Transformer, text-only (2B and 7B) | Decoder-only Transformer, text-only (7B, 13B, and 70B) |
| Fine-Tuning Flexibility | High (pre-trained and instruction-tuned weights) | High (pre-trained and chat-tuned weights) |
| Privacy Focus | Automated filtering of personal and sensitive data from training sets | Removed data from sites known to hold large amounts of personal information |
| Multilingual Support | Limited (trained primarily on English) | Limited (trained primarily on English) |
| Community Engagement | New (launched February 2024) | Established (launched July 2023) |

Developers, choose your champion wisely.

Benchmarking Gemma

For a detailed benchmark report, visit Kaggle’s Gemma Model and Google’s Gemma Page. Spoiler alert: Gemma’s versatility shines in language understanding and reasoning tasks. In Google’s reported results, Gemma 7B outperforms Llama 2 7B and, on many tasks, even Llama 2 13B. Let’s break it down:

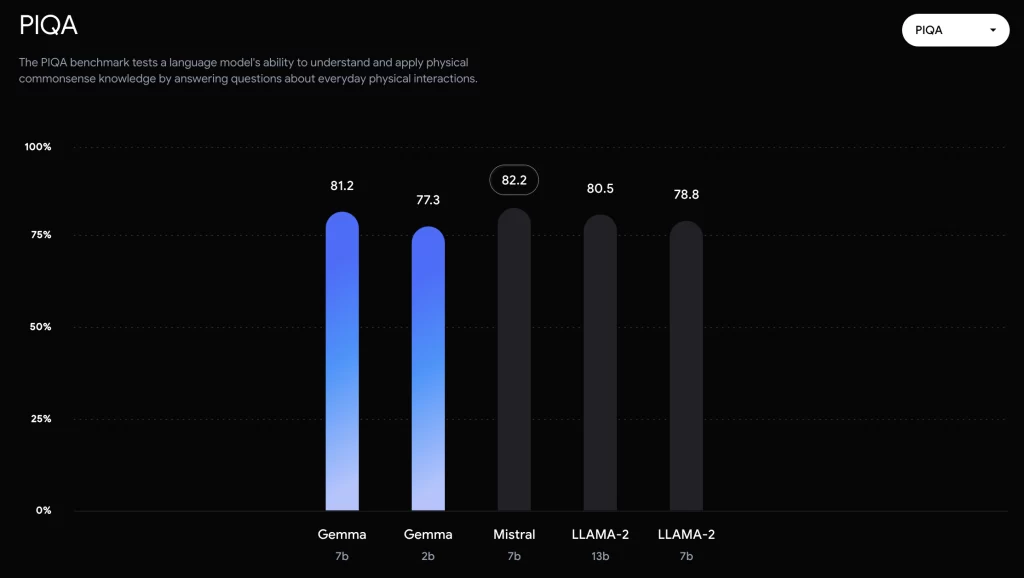

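- PIQA (Physical Interaction: Question Answering):

Gemma 7B scores 81.2% accuracy on PIQA, ahead of Llama 2 7B and 13B in Google’s comparison. PIQA tests physical commonsense: given an everyday goal, the model must choose the more sensible of two solutions. It is a text-only benchmark, so a strong score signals solid everyday reasoning, not image understanding.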

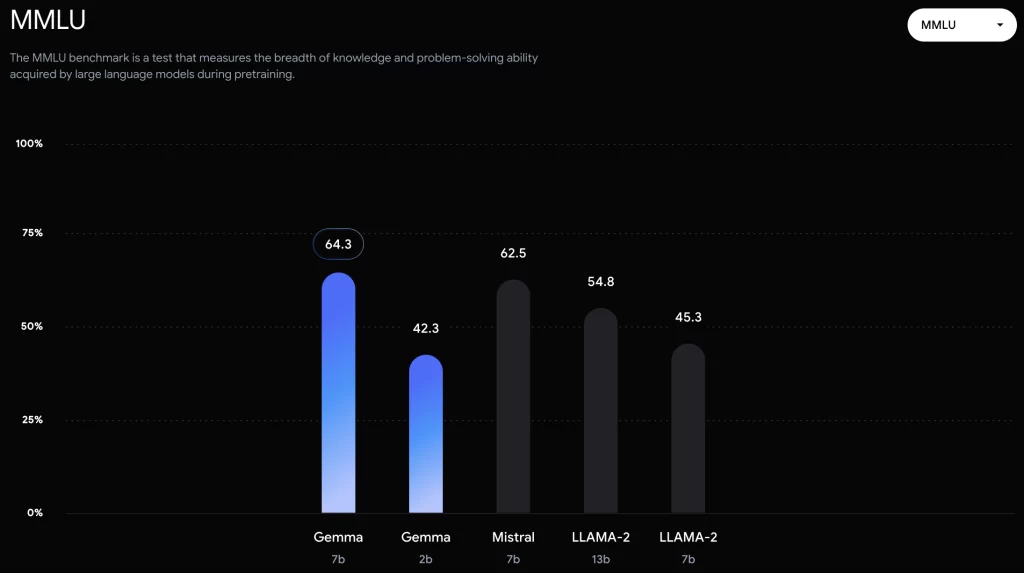

- MMLU (Massive Multitask Language Understanding):

MMLU is a text-only, multiple-choice exam spanning 57 subjects, from elementary mathematics to law. Gemma 7B reports 64.3% (5-shot), well ahead of Llama 2 7B and 13B, although Llama 2 70B still scores higher.

- Transfer Learning Efficiency:

Gemma’s compact 2B and 7B sizes make fine-tuning comparatively cheap: runs fit on more modest hardware and finish sooner than with much larger models. Developers can iterate swiftly, experimenting with diverse downstream tasks.

- Multilingual Fluency:

Like Llama 2, Gemma was trained primarily on English data, and its headline benchmarks are in English. Whether it’s Hindi, Mandarin, or Swahili, evaluate on your own data before committing.

- Privacy-Preserving Design:

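This is a design note rather than a benchmark. Google reports filtering certain personal and sensitive data from the pre-training set, and running the model on your own hardware keeps fine-tuning data in-house. Neither guarantees zero leakage, so review what you train on.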

Remember, benchmarks are snapshots. Real-world performance depends on your specific use case, data quality, and fine-tuning expertise. So, grab your virtual lab coat, experiment, and let Gemma surprise you.
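To make those benchmark numbers less abstract: PIQA and MMLU are multiple-choice tasks, and one common way to score a language model on them is to compute the model’s log-likelihood for each candidate answer and pick the highest. Accuracy is then the fraction of questions where that pick matches the label. The Python sketch below shows only this scoring step, under stated assumptions: the scores are made-up numbers standing in for real model outputs, and `pick_answer` and `multiple_choice_accuracy` are hypothetical helpers, not part of any Gemma, Kaggle, or Google API. Published evaluations may use different prompts and score normalization.

```python
from typing import Sequence


def pick_answer(choice_scores: Sequence[float]) -> int:
    """Return the index of the highest-scoring candidate (ties go to the first)."""
    return max(range(len(choice_scores)), key=lambda i: choice_scores[i])


def multiple_choice_accuracy(
    all_scores: Sequence[Sequence[float]], labels: Sequence[int]
) -> float:
    """Fraction of examples whose top-scoring candidate matches the gold label."""
    if len(all_scores) != len(labels):
        raise ValueError("need exactly one label per example")
    if not labels:
        raise ValueError("no examples to score")
    correct = sum(
        pick_answer(scores) == label for scores, label in zip(all_scores, labels)
    )
    return correct / len(labels)


# Hypothetical log-likelihoods for three two-choice (PIQA-style) questions.
# In a real evaluation these would come from the model, one score per candidate.
sample_scores = [[-12.3, -15.1], [-9.8, -7.2], [-20.0, -18.5]]
sample_labels = [0, 1, 0]

print(multiple_choice_accuracy(sample_scores, sample_labels))
```

With the sample scores, the third pick disagrees with its label, so two of three answers are correct. Running the same kind of check on a small labeled sample of your own task is a cheap way to see whether headline benchmark gains carry over.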


Expert Tips from the Trenches

As a seasoned tech blogger, here’s my insider advice:

- Fine-Tuning Finesse:

Gemma’s power lies in fine-tuning. Understand your task, curate relevant data, and fine-tune judiciously. It’s like tuning a Stradivarius—precision matters.

- Prompt Magic Tricks:

Gemma’s instruction-tuned variants expect a specific chat-turn format, so match it when prompting or fine-tuning. Imagine a support chatbot fine-tuned on your own product’s FAQs. Magic, right?

- Community Collaboration:

Join the Gemma community. Share insights, troubleshoot, and celebrate wins. Collaboration fuels progress.
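The fine-tuning advice above starts with curating data. As a minimal Python sketch, assuming an instruction-tuned Gemma checkpoint and a simple prompt/response dataset, each record can be rendered into the user/model turn markers (`<start_of_turn>`, `<end_of_turn>`) that Google’s Gemma documentation describes. The `Example` record, the helper functions, and the sample data are hypothetical; check the markers against your tokenizer’s chat template before training, because templates can differ between model versions.

```python
from dataclasses import dataclass

# Turn markers as described in Google's Gemma documentation for the
# instruction-tuned models. Assumption: verify them against the tokenizer's
# chat template for the exact checkpoint you fine-tune.
START_TURN = "<start_of_turn>"
END_TURN = "<end_of_turn>"


@dataclass
class Example:
    """One record from a hypothetical custom prompt/response dataset."""
    prompt: str
    response: str


def to_gemma_turns(example: Example) -> str:
    """Render a single user/model exchange in Gemma's turn format."""
    return (
        f"{START_TURN}user\n{example.prompt.strip()}{END_TURN}\n"
        f"{START_TURN}model\n{example.response.strip()}{END_TURN}\n"
    )


def build_training_texts(examples: list[Example]) -> list[str]:
    """Drop records with an empty prompt or response, then format the rest."""
    return [
        to_gemma_turns(ex)
        for ex in examples
        if ex.prompt.strip() and ex.response.strip()
    ]


# Hypothetical sample data: the second record has no prompt and is filtered out.
data = [
    Example("What does PIQA measure?", "Physical commonsense reasoning."),
    Example("", "An orphan answer with no question."),
]

for text in build_training_texts(data):
    print(text)
```

Dropping records with an empty prompt or response is a deliberately simple quality filter; real curation usually also removes duplicates and off-task examples.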

The Open Source Ecosystem: Friend or Foe for Developers?

The rise of open-source AI raises a crucial question: is it a boon or a bane for developers?

Pros:

- Accessibility and affordability: Open-source eliminates licensing fees, democratizing AI development for individuals and startups.

- Innovation and customization: Developers can experiment, modify, and build upon existing models, accelerating innovation and tailor-made solutions.

- Transparency and trust: The open-source nature instills trust by allowing developers to scrutinize the inner workings of AI models.

- Community and collaboration: Open-source communities foster knowledge sharing, support, and collaborative problem-solving.

Cons:

- Maintenance and support: The onus of maintaining and updating models often falls on the community, requiring technical expertise and resources.

- Quality and security concerns: Open-source models might have undetected biases or security vulnerabilities, requiring careful evaluation.

- Limited documentation and training: Compared to proprietary models, open-source offerings might lack comprehensive documentation and training materials.

- Competition and exploitation: Open access can lead to fierce competition in specific niches and potential exploitation of models for malicious purposes.

Ultimately, the answer depends on your individual needs and risk tolerance. As a developer, carefully weigh the pros and cons before venturing into the open-source AI landscape.

FAQs: Your Burning Questions, Answered

Q1: Is Gemma suitable for small-scale projects?

Absolutely! Gemma’s small 2B and 7B sizes make it practical for startups, hobbyists, and research projects, since the models can run on a laptop or desktop. Start small, dream big.

Q2: How does Gemma handle low-resource languages?

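With care. Gemma was trained primarily on English, so low-resource languages are a weak spot out of the box. Fine-tuning on in-language data can help, but measure the results before relying on them.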

Q3: Can I fine-tune Gemma on my custom dataset?

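Certainly! Both the pre-trained and instruction-tuned checkpoints can be fine-tuned. Just ensure your data is clean, relevant to your task, and formatted the way the model expects.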

Q4: Is Gemma’s privacy focus just hype?

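Not entirely. Google reports filtering personal and sensitive data from the training set, and local deployment keeps your prompts on your own machine. But no model is a vault: what you fine-tune on can surface in outputs, so keep secrets out of your training data.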

Conclusion: The Gemma Odyssey

In this epic saga of AI models, Gemma emerges as a worthy contender. It’s not just about rivaling Meta; it’s about empowering creators worldwide. So, dear reader, explore Gemma, wield its powers, and let your imagination soar. The AI revolution awaits, and Gemma is your trusty steed. Saddle up!

May your code compile, your gradients converge, and your curiosity never wane.

Disclaimer: The views expressed in this article are solely those of the author and do not represent any official endorsement by Google or Meta.

Ajay Maurya is a passionate writer with a penchant for entertainment, science, and technology. A graduate from a prestigious university, he brings three years of writing expertise to the table, coupled with a deep understanding of SEO. When he’s not crafting words, you’ll find him exploring the realms of creativity and knowledge.
